Correspondence Zwart ++


- reply_Zwart2020/Makefile: 2 additions, 2 deletions

- reply_Zwart2020/correspondence.md: 30 additions, 24 deletions

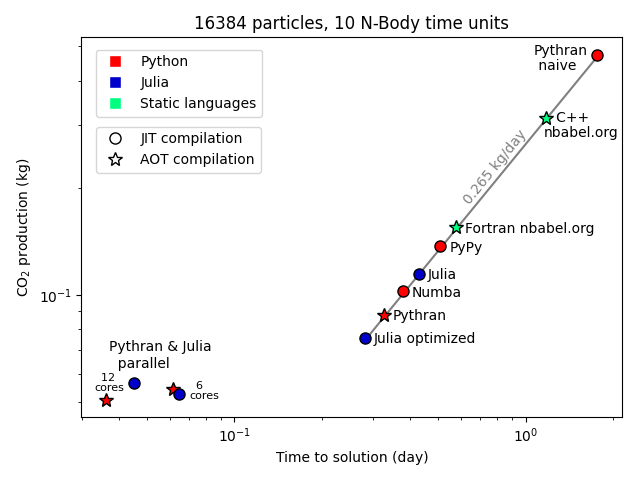

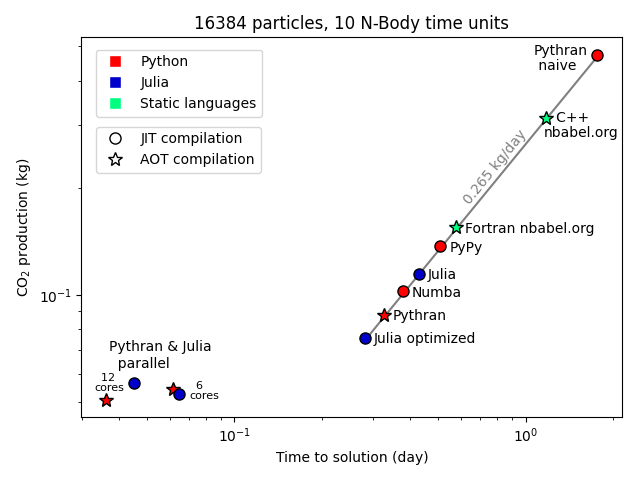

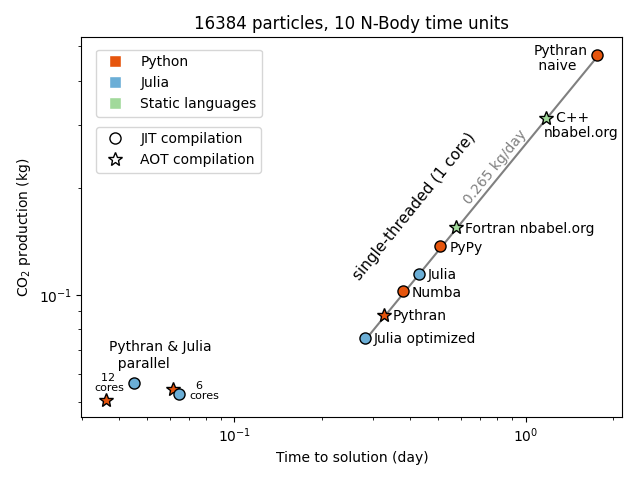

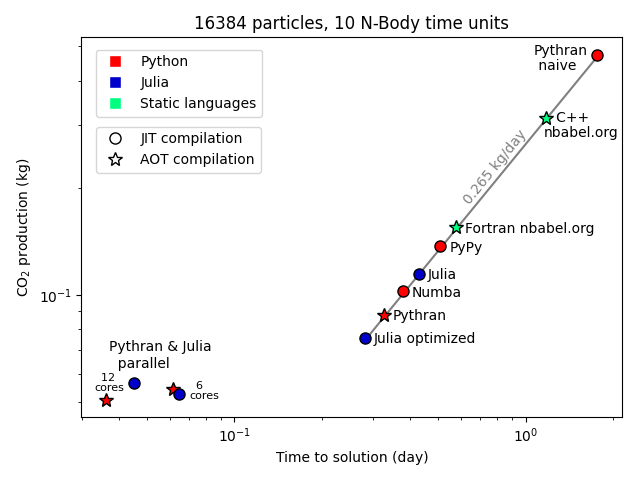

- reply_Zwart2020/figs/fig_bench_nbabel_parallel.png: 0 additions, 0 deletions

- reply_Zwart2020/pubs.bib: 11 additions, 0 deletions
